(*My) Procedurally Generated Music is Awful

don’t try to procedurally generate something that you can’t already create, dummy

"I don't think that this could ever create something that I wouldn't mute"

- Voxel, laying down the hard truths about music generation in general and my music generator in particular

In groovelet32.exe, I’d like for the art assets to be capable of wiggling and booping in time with the music.

That sounds simple, but that simple idea contains, uh, multitudes.

This can be accomplished in one of a few different ways:

1. Buy, commission, or write music, transcribe the whole thing into the execution environment, and play it back with locally available music generation tools (Tone.js in JavaScript, Helm or Wwise in Unity).

2. Buy, commission, or write music, transcribe the important moments of the written music into code, and have instrument hits trigger effects.

3. Buy, commission, or write music, use automated analysis (Koreographer in Unity) to guess when dynamics are occurring in the music, and use those to trigger effects.

4. Buy, commission, or write music, export it as MIDI, and play it back while using the MIDI data to drive effects.

5. Generate music entirely in place, and have instrument hits directly trigger effects.
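The last strategy makes syncing almost free. If the code that generates a note also announces it, the art can listen for the same event the synth plays. Here's a minimal sketch of that plumbing as a publish/subscribe hub. `NoteEvent`, `NoteBus`, and the instrument names are hypothetical. They don't come from groovelet32.exe or from any library.

```typescript
// Hypothetical note shape; the post doesn't specify one.
interface NoteEvent {
  instrument: string; // e.g. "kick", "lead"
  pitch: number; // MIDI note number
  time: number; // seconds from song start
}

type Listener = (e: NoteEvent) => void;

// Minimal publish/subscribe hub: the generator emits each note once,
// and every subscriber (synth, sprites, particles) sees the same event.
class NoteBus {
  private listeners: Record<string, Listener[]> = {};

  // Subscribe to one instrument, or to "*" for every note.
  on(instrument: string, fn: Listener): void {
    if (!this.listeners[instrument]) this.listeners[instrument] = [];
    this.listeners[instrument].push(fn);
  }

  emit(e: NoteEvent): void {
    for (const fn of this.listeners[e.instrument] || []) fn(e);
    for (const fn of this.listeners["*"] || []) fn(e);
  }
}

// Usage: a stand-in synth hears everything; a stand-in sprite wiggles on kicks.
const bus = new NoteBus();
const played: NoteEvent[] = [];
const wiggles: number[] = [];
bus.on("*", (e) => played.push(e));
bus.on("kick", (e) => wiggles.push(e.time));

bus.emit({ instrument: "kick", pitch: 36, time: 0 });
bus.emit({ instrument: "lead", pitch: 72, time: 0.25 });
bus.emit({ instrument: "kick", pitch: 36, time: 0.5 });
console.log(played.length, wiggles.join(", ")); // 3 0, 0.5
```

Because the visuals hang off the same event stream as the audio, there's nothing to analyze or transcribe. The catch, of course, is that you need a generator worth listening to.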

Each strategy has its ups and downs. Notably, the first four strategies start with the simple-sounding but imposing “buy, commission, or write music”. Buying music - well, it’s hard to build a whole game around stock music - especially if music is as fundamental to the experience as it should be in a game about musical robots.

Commissioning music is simply too expensive, if I’m planning on paying my composer fairly (which I would be, if I had any money).

Writing music on my own would require a serious upgrade to my music production skills, because I’m currently operating somewhere near the “Three Blind Mice” level. A number of my family members are musically talented - my younger brother married into a “music teacher” family - but I don’t think any of them has ever cracked open a DAW, and they’re pretty busy with their own lives, so I can’t exactly shake that tree and expect video-game-ready tunes to fall out.

So that leaves me with procedurally generated music. It’s perhaps naive to think that I, a person who can’t even write a regular song, could build a computer that could write music for me, but hey - if I’m good at anything¹, it’s programming.

A Basic Architecture for Procgen Music

Some time back, I watched this inspiring JSConf talk:

so cool

tl;dr “we built a tool called Magenta.js that allows you to tensorflow up some tunes”

I like tensorflow! I like tunes!

Setting about the task with some zeal, I managed to get Magenta.js generating tunes, slowly. I had a simple plan for turning slowly-generated 8-second tunes into longer songs:

1. Have a background server process generate 8-second, three-part clips.

2. Use some basic heuristics to guess at the key signature (“C# major”) of the clips, evaluate their intensity (lots of drum hits? loads of notes?) and save them in a huge clip database.

3. Create a search interface for the clip database.

4. Have the client request clips from the server in a specific key and intensity.

5. Weave two or three clips together, repeating them a couple of times, to make a full “song”.

6. Add tools to control the requested key signature, intensity, and change instrument, tempo, and what-have-you at the last second.

7. Take the output and make it sound like real human music that people would listen to on purpose.
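Steps 2 through 5 are where the heuristics live. Below is one plausible way to do them under a made-up clip format. It guesses a major key by counting how many notes land inside each of the 12 major scales. It scores intensity as weighted notes per second. Then it filters the database and repeats clips to build a song. `Clip`, `guessKey`, `intensity`, `findClips`, `weave`, and the double weighting for drums are all hypothetical. The sketch only considers major keys, and it isn't the engine's actual code.

```typescript
// Hypothetical clip format; the post doesn't say how clips are stored.
interface Note {
  pitch: number; // MIDI note number (60 = middle C)
  drum: boolean; // true for percussion hits
}

interface Clip {
  id: string;
  seconds: number; // clip length (about 8 s from the generator)
  notes: Note[];
}

const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];
const MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]; // semitone offsets of a major scale

// Step 2a: guess a major key by counting how many pitched notes land inside
// each of the 12 major scales; ties go to the key whose tonic appears most.
function guessKey(clip: Clip): string {
  const histogram: number[] = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0];
  for (const n of clip.notes) {
    if (!n.drum) histogram[n.pitch % 12]++;
  }
  let best = 0;
  let bestInScale = -1;
  let bestTonic = -1;
  for (let tonic = 0; tonic < 12; tonic++) {
    let inScale = 0;
    for (const step of MAJOR_STEPS) inScale += histogram[(tonic + step) % 12];
    const tonicHits = histogram[tonic];
    if (inScale > bestInScale || (inScale === bestInScale && tonicHits > bestTonic)) {
      best = tonic;
      bestInScale = inScale;
      bestTonic = tonicHits;
    }
  }
  return `${NOTE_NAMES[best]} major`;
}

// Step 2b: intensity as weighted events per second; drum hits count double
// (an arbitrary weighting).
function intensity(clip: Clip): number {
  let weighted = 0;
  for (const n of clip.notes) weighted += n.drum ? 2 : 1;
  return weighted / clip.seconds;
}

// Steps 3-4: search the clip database by key and intensity range.
function findClips(db: Clip[], key: string, min: number, max: number): Clip[] {
  return db.filter((c) => {
    const level = intensity(c);
    return guessKey(c) === key && level >= min && level <= max;
  });
}

// Step 5: weave clips into a "song" by repeating each one in turn.
function weave(clips: Clip[], repeats: number): string[] {
  const song: string[] = [];
  for (const clip of clips) {
    for (let r = 0; r < repeats; r++) song.push(clip.id);
  }
  return song;
}

// Usage: two 8-second clips built from a C major scale, one with kick drums.
const scale: Note[] = [60, 62, 64, 65, 67, 69, 71, 72].map((pitch) => ({ pitch, drum: false }));
const kicks: Note[] = [36, 36, 36, 36].map((pitch) => ({ pitch, drum: true }));
const db: Clip[] = [
  { id: "calm", seconds: 8, notes: scale },
  { id: "busy", seconds: 8, notes: scale.concat(kicks) },
];
console.log(guessKey(db[0])); // C major
console.log(weave(findClips(db, "C major", 0, 3), 2).join(", ")); // calm, calm, busy, busy
```

A pure in-scale count can't tell C major from A minor, because they share every note. That's one small example of how quickly "basic heuristics" stop being basic.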

Now, there are some definite problems with this scheme. One of them is Tone.js - an unbelievably powerful synth workbench written for people who have read the entire book on Digital Signal Processing, and no other people.

I actually took and passed a senior-level course in DSP for music generation, some 12 years ago. I have less of an excuse than the average person to be absolutely garbage at attaching oscillators to things. I’m still garbage, mind you, I just have less of an excuse.

Anyways, after some serious effort, I got steps 1-6 working.

Look at that! Sliders! Tempo! Intensity! Configurable per-channel instruments and levels!

Here’s the first song produced by the system that sounded even remotely passable.

It’s… okay, right? Not bad. That’s where the system was a year ago.

Here’s another song from just a few days ago:

Admittedly, I haven’t been working on the procgen engine for that entire year, but it hasn’t evolved much in the interim, huh?

I figured I might be able to shape the musicality of the output with simple heuristic rules and adjustments and embellishments, but - I can’t. An extremely talented musician/producer might be able to, but as we’ve established, I’m worse at finding C than the salty pirates of landlocked Saskatchewan.

Most of my clever changes would make the output sound better… some of the time. And worse, some of the time. Sometimes, rarely, the system produces something that, if you weren’t paying a terrible lot of attention, you might confuse for real music. A lot of the time it produces something bland and amusical. About as often it produces something actively unpleasant.

One idea I’ve had is building an underlying voting system to clear “bad” tunes out of the system. If my music generator is powered by a thousand clips that sound pretty good under almost any circumstances, because all the ones that sound bad have been downvoted out of existence, well, that’s one way of doing things.
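Here's a minimal sketch of that voting filter, assuming each clip just tracks upvotes and downvotes. `VotedClip`, `approval`, `prune`, and the thresholds are hypothetical. Laplace smoothing adds one phantom vote each way, which keeps a brand-new clip from being scored as 0/0. The `minVotes` check keeps one grumpy listener from deleting a clip outright.

```typescript
// Hypothetical vote record; the post only floats the idea.
interface VotedClip {
  id: string;
  up: number;
  down: number;
}

// Laplace-smoothed approval (one phantom vote each way), so an unrated
// clip scores 0.5 instead of 0/0.
function approval(c: VotedClip): number {
  return (c.up + 1) / (c.up + c.down + 2);
}

// Only judge clips with at least `minVotes` votes, so a single grumpy
// listener can't delete a new clip outright.
function prune(clips: VotedClip[], minVotes: number, threshold: number): VotedClip[] {
  return clips.filter((c) => c.up + c.down < minVotes || approval(c) >= threshold);
}

const pool: VotedClip[] = [
  { id: "keeper", up: 9, down: 1 }, // approval 10/12, about 0.83
  { id: "dud", up: 1, down: 9 }, // approval 2/12, about 0.17
  { id: "fresh", up: 0, down: 1 }, // too few votes to judge yet
];
console.log(prune(pool, 5, 0.4).map((c) => c.id).join(", ")); // keeper, fresh
```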

But even at its best, the output isn’t… terribly good.

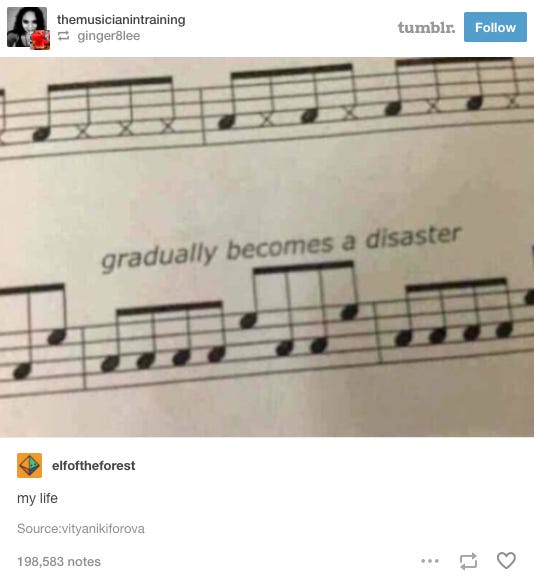

And a big part of the problem is just that composing music is hard. If you take the simplest musical style that you can think of, there’s someone online explaining in painful detail that the soundtrack to Fart Chalice IV actually takes advantage of Phrygian pentameter and post-modern phrasing to create artificial dissonance between the fourth and sixteenth notes in an alternating jazz-inspired progression.

I can just barely read music. I can’t deal with that!

There isn’t a simple set of rules for what will sound good when music is involved - people are too different from one another. There are dozens of conflicting sets of rules, and because there are so many ways to break those rules and still have music come out sounding good (or follow the rules and still have music come out sounding bad), a lot of music theory isn’t so much “helpful guidelines” as it is “endless taxonomy”, and most of that taxonomy has been compiled over the last 500 years, mostly by Germans and Italians.

I am literally more afraid of music theory than digital signal processing.

The Many Flaws of Procedural Generation

I love this book. I’ve read it cover-to-cover, more than once. I even enjoyed the chapter on music generation, where the author cleverly fudged things by picking a pentatonic scale, in which everything sounds pretty nice together, and then bonking around pretty much at random. (A lot of procedurally generated or input-driven music uses a technique like this.)
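The pentatonic trick is easy to sketch. This isn't the book's code. It's a generic random walk over a C major pentatonic scale, and the scale, range, and step size are my own choices. It uses a small seeded PRNG (mulberry32) so that the same seed always produces the same tune. `pentatonicWalk` is a hypothetical name.

```typescript
// Small seeded PRNG (mulberry32) so the same seed always gives the same tune.
function mulberry32(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // float in [0, 1)
  };
}

// C major pentatonic (C D E G A) over two octaves, as MIDI note numbers.
const PENTATONIC = [60, 62, 64, 67, 69, 72, 74, 76, 79, 81];

// Random walk: start anywhere, then move at most two scale degrees per note,
// clamped to the range. Every note is in the scale, so nothing clashes badly.
function pentatonicWalk(length: number, seed: number): number[] {
  const rand = mulberry32(seed);
  let idx = Math.floor(rand() * PENTATONIC.length);
  const melody: number[] = [];
  for (let i = 0; i < length; i++) {
    melody.push(PENTATONIC[idx]);
    const step = Math.floor(rand() * 5) - 2; // -2 to +2 scale degrees
    idx = Math.min(PENTATONIC.length - 1, Math.max(0, idx + step));
  }
  return melody;
}

const tune = pentatonicWalk(16, 42);
console.log(tune.join(" "));
```

It never sounds *wrong*, which is the whole appeal. It also never really goes anywhere, which is the whole problem.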

It’s packed with cool ideas involving graphs and rules engines and a bunch of stuff that gets me actively excited. One of the contributing authors uses the term “gestalt” a few too many times.

A big part of the book was just talking about when to use procedural generation.

I’d summarize the book’s answer as “if you’re reading this, less than you imagine”.

this, for example, is probably too big a promise, unless they mean just the clouds

The benefits of generated content are obvious. Infinite content, even infinite bland content, is still infinite. This is why people still go to buffet restaurants - unlimited bad food is still pretty compelling.

So, some of the problems of procgen:

If you restrict the output-space too aggressively, your procgen output will feel bland and samey. When I restricted the output of my music generator using too many Music Theory rules, it could only produce like, 6 different songs, which all felt very bland and similar.

If you don’t restrict the output-space enough, your output will feel formless and unfair. When I turned off the Music Theory rules, the amount of garbage output got out of control.
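To make the trade-off concrete, here's a toy. It treats every 4-note phrase within one octave as a possible "song" and counts how many survive a stack of rules. The three rules are hypothetical stand-ins for "Music Theory rules", not the ones my generator actually used. The approach is plain brute-force generate-and-test.

```typescript
// A toy "song": a 4-note phrase using MIDI notes 60-71 (one chromatic octave from middle C).
type Phrase = number[];
type Rule = (p: Phrase) => boolean;

const C_MAJOR_PCS = [0, 2, 4, 5, 7, 9, 11]; // pitch classes of C major

// Hypothetical stand-ins for "Music Theory rules".
const inKey: Rule = (p) => p.every((n) => C_MAJOR_PCS.indexOf(n % 12) >= 0);
const smallLeaps: Rule = (p) =>
  p.every((n, i) => i === 0 || Math.abs(n - p[i - 1]) <= 4);
const tonicEnds: Rule = (p) => p[0] === 60 && p[p.length - 1] === 60;

// Brute-force generate-and-test: enumerate all 12^4 phrases and count
// the ones that pass every rule.
function countPhrases(rules: Rule[]): number {
  const phrase: Phrase = [0, 0, 0, 0];
  let count = 0;
  for (let n = 0; n < 12 ** 4; n++) {
    let x = n;
    for (let i = 0; i < 4; i++) {
      phrase[i] = 60 + (x % 12); // read n as four base-12 digits
      x = Math.floor(x / 12);
    }
    if (rules.every((rule) => rule(phrase))) count++;
  }
  return count;
}

console.log(countPhrases([])); // 20736
console.log(countPhrases([inKey])); // 2401
console.log(countPhrases([inKey, smallLeaps])); // 543
console.log(countPhrases([inKey, smallLeaps, tonicEnds])); // 9
```

With no rules you get 20,736 phrases, and nearly all of them are mush. With all three rules you get 9, and every one of them is just noodling between C, D, and E. The interesting music lives somewhere in between, and finding that "somewhere" is the hard part.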

In order to produce procedurally generated output that’s meaningful, you must understand the problem space very well, and work hard at crafting intelligent rules.

It is orders of magnitude more work than just making good output.

Or, to put it bluntly, “don’t try to procedurally generate something that you can’t already create, dummy”.

If I play with the generator long enough, I hit little patches of vaguely almost-listenable music, but most of the time the output is more of a formless mush:

Even worse, after listening to it for long stretches of time, I’d manage to convince myself that maybe I actually had something good on my hands. Usually it took either playing it for Voxel, who knows what music is supposed to sound like, or listening to some actual human-made music on my own to remind me that I had been listening to a droning mess of nothing for several consecutive hours, and that it was starting to melt my brain into a puddle.

So, You’re Giving Up, Then?

Yeah - maybe not permanently, but sometimes it’s important to know when to throw in the towel. This part of the project has, I think, failed, and it’s reaching the point where more effort is being met with diminishing returns.

I think I’m going to try another plan from my list: “buy, commission, or write music, output music as MIDI, play music while using the MIDI data to silently drive effects”.
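The nice thing about this plan is that the effects side is simple. Here's a minimal sketch, assuming the MIDI file has already been parsed into note-on events with times in seconds (parsing isn't shown). The idea is to keep a cursor into the time-sorted notes and, each frame, hand back everything that has started since the last frame. `MidiNote` and `MidiCursor` are hypothetical names. The playback time would come from whatever clock the audio engine exposes.

```typescript
// Hypothetical: note-on events already parsed from a MIDI file
// (parsing isn't shown), with times converted to seconds.
interface MidiNote {
  time: number; // seconds from song start
  pitch: number; // MIDI note number
  velocity: number; // 1-127; could scale how hard a sprite boops
}

// Walks a time-sorted note list alongside the playback clock. Call update()
// once per frame with the current playback time; it returns every note that
// started since the previous call.
class MidiCursor {
  private readonly notes: MidiNote[];
  private next = 0;

  constructor(notes: MidiNote[]) {
    this.notes = notes.slice().sort((a, b) => a.time - b.time);
  }

  update(now: number): MidiNote[] {
    const due: MidiNote[] = [];
    while (this.next < this.notes.length && this.notes[this.next].time <= now) {
      due.push(this.notes[this.next]);
      this.next++;
    }
    return due;
  }

  // Jump to a new position, e.g. when the song loops or the player seeks.
  seek(time: number): void {
    const i = this.notes.findIndex((n) => n.time >= time);
    this.next = i === -1 ? this.notes.length : i;
  }
}

const cursor = new MidiCursor([
  { time: 0.5, pitch: 36, velocity: 100 },
  { time: 0.0, pitch: 36, velocity: 110 },
  { time: 1.0, pitch: 38, velocity: 90 },
]);
const frame1 = cursor.update(0.016); // the note at 0.0
const frame2 = cursor.update(0.4); // nothing new
const frame3 = cursor.update(1.2); // the notes at 0.5 and 1.0
```

It's better to drive this from the audio clock than from a frame counter. Frame timing drifts, and the audio clock tracks what the player is actually hearing.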

I’ll probably write some basic drum-loop first-fifteen-minutes-of-the-FruityLoops-tutorial jams to get the project off the ground, and then find someone competent to compose music if I ever feel like the project is within biting distance of actually shipping.

Anyways, Groovelet Music Engine, you’ve got one last chance to shine. Play yourself off! Preferably with something a little sad.

A Goodbye From the Groovelet Music Engine

… god dammit.