Okay, More Procedurally Generated Music, Then

More constrained, less creative.

So, my article on procedural generation made the rounds on reddit.com/r/gamedev and Hacker News, got some 12,000 hits, and generally stirred up some noise.

Here’s a summary of the different kinds of responses:

Neural network generated music is getting pretty good. (Yep, particularly GAN-generated stuff can be pretty convincing.)

Procedurally generated music isn’t bad, you’re just incompetent. (Well fuck you, then)

Various shades of boring techno-utopian accelerationism. “HUMANS WILL BE REPLACED”. (Sure, I guess, but not before the climate collapses, so it probably doesn’t matter)

People agreeing in a sort of “I can’t believe you thought this would be possible” way and holding the line for traditional production methods and music theory.

People posting other, often much more successful procgen music experiments. (I mean, I’m sad that you could do this so much better than I could, but I think it’s cool anyways, so, thanks)

Multiple mentions of Autechre, an experimental electronica band who use cool procgen tricks as part of their creative process. (Neat.)

People abusing the (pentatonic scale+randomness) angle. (yep, that is indeed a good strategy)

Someone says “as the main focus, it appears like an unnerving vacuous simulacra” which is just a real chef’s kiss reminder of why nobody needs to take Hacker News too seriously.

Anyways, just a lot of people with neat stuff to say.

enough to turn me into a legitimate recording artist

Move aside, Taylor Swift! There’s a new sheriff in town!

So I’m feeling a little less like abandoning this whole mess.

In defense of the generated music - it was always intended to be filler in between more carefully thought out moments with human-generated music. So long as I can keep it from being actively irritating it’s good to go.

And… honestly, as much as I want to quit working on this thing, donking around with it is pretty addictive.

(It helps that I tried to get started on some graphics code for Voxel and immediately got completely rekt by bad interactions between Pixi, react-pixi, pixi-viewport and devicePixelRatio, yeugh.)

So: I spent some time tweaking the generative algorithm to provide more structure and less randomness. One of the biggest complaints about the original was the obvious problem that it doesn’t give the ear much to hold on to - and a big part of that was how ambitious I was trying to be with the generation. Too ambitious. Two separate, potentially incompatible 8-second melodies, intertwined! When it worked, it was cool, but it didn’t work terribly often.

Now, instead of working with two separate melodies, the algorithm takes a single melodic idea and applies successive mutations to it from a set of possible mutations.
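Roughly, the idea looks like the sketch below. Heavy caveats: the three mutations here (transposeUp, retrograde, invert) are made-up stand-ins for whatever the real set contains, the melody is just an array of MIDI note numbers, and developMelody is a hypothetical name. The rng parameter only exists so the randomness can be pinned down for testing.

```javascript
// Hypothetical mutation set: illustrative stand-ins, not the real list.
const mutations = {
  // Shift every note up a whole tone.
  transposeUp: (melody) => melody.map((note) => note + 2),
  // Play the phrase backwards.
  retrograde: (melody) => [...melody].reverse(),
  // Mirror every note around the first note of the phrase.
  invert: (melody) => melody.map((note) => 2 * melody[0] - note),
};

// Start from one melodic idea and mutate it step by step.
// Each new phrase is a mutation of the phrase before it.
function developMelody(seed, steps, rng = Math.random) {
  const names = Object.keys(mutations);
  const phrases = [seed];
  for (let i = 0; i < steps; i++) {
    const name = names[Math.floor(rng() * names.length)];
    const previous = phrases[phrases.length - 1];
    phrases.push(mutations[name](previous));
  }
  return phrases;
}

// Example: one idea (C, D, E as MIDI numbers) plus four mutated phrases.
const phrases = developMelody([60, 62, 64], 4);
console.log(phrases);
```

Because every phrase descends from the same seed, the ear gets something familiar to hang on to even as the tune wanders.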

THAT’S RIGHT, IT’S TIME TO PLAY THE FUGUE

.

.

.

.

I’m sorry, I’ll show myself out.

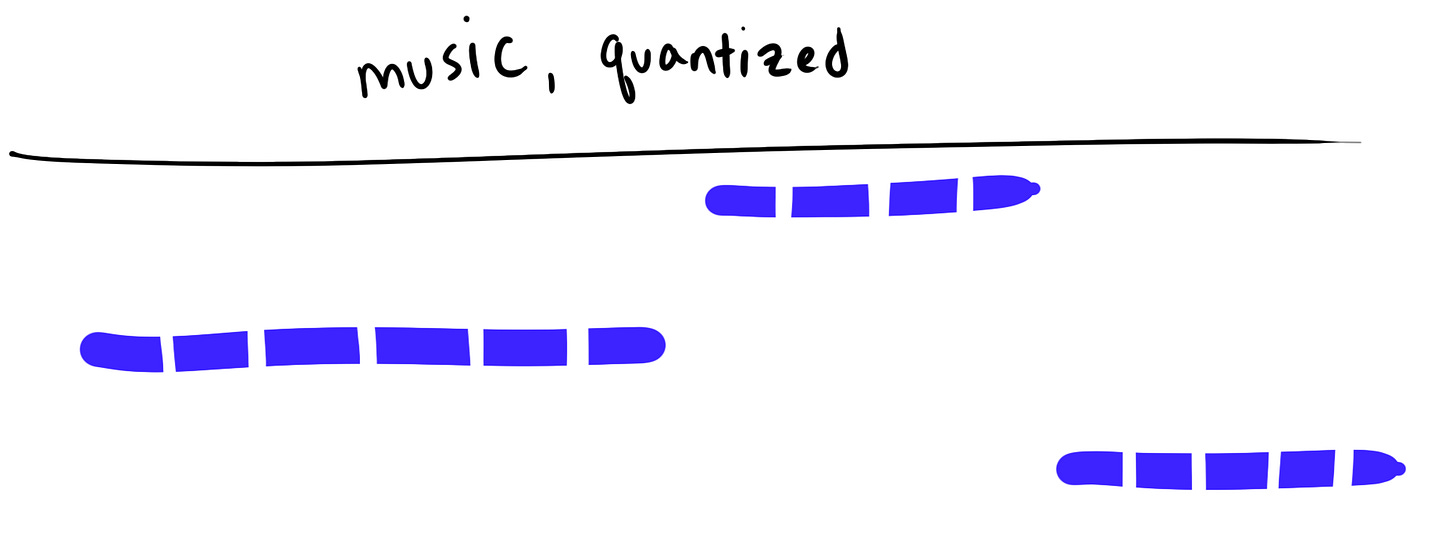

I’m also providing a bassline and countermelody, in both cases using the surprisingly successful technique of quantization-with-random-removal.

Quantization With Random Removal

This may seem embarrassingly basic to music producers, but to me, a music noob of the highest order, this seems like I have unlocked only the darkest and most arcane secrets. (For serious, where does one learn this stuff? If you’ve got good resources hook me up.)
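For the non-producers, here is one plausible reading of quantization-with-random-removal as a sketch. The assumptions: notes are objects with a time (in beats) and a pitch, "quantization" means snapping each note to the nearest grid slot and keeping only the first note per slot, and "random removal" means dropping each surviving note with some probability. The function name, the keepProbability parameter, and the injectable rng are all hypothetical.

```javascript
// Snap notes to a grid, keep one note per slot, then randomly thin them out.
function quantizeWithRandomRemoval(notes, grid, keepProbability, rng = Math.random) {
  const bySlot = new Map();
  for (const note of notes) {
    // Nearest grid position for this note.
    const slot = Math.round(note.time / grid) * grid;
    // First note to land in a slot wins; later ones are discarded.
    if (!bySlot.has(slot)) bySlot.set(slot, { ...note, time: slot });
  }
  return [...bySlot.values()]
    .sort((a, b) => a.time - b.time)
    // Random removal: each note survives with probability keepProbability.
    .filter(() => rng() < keepProbability);
}

// Example: derive a sparse bassline from a busy melody, one slot per beat.
const melody = [
  { time: 0.1, pitch: 60 },
  { time: 0.4, pitch: 62 },
  { time: 0.9, pitch: 64 },
  { time: 1.6, pitch: 65 },
];
console.log(quantizeWithRandomRemoval(melody, 1, 0.7));
```

The quantization keeps everything locked to the beat, and the random gaps keep the line from sounding like a metronome.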

The result provides music that’s significantly more stable - so stable, in fact, that it goes a little too far in the other direction and comes off feeling pretty flat and repetitive.

Here’s the playlist from the most recent session. One big difference between this session and others is that this is just… four successive runs. With a lot of previous runs, I’d have to run it six or seven times and throw out all of the runs that were actively terrible to get to something merely forgettable - whereas these upgrades produce a system that allows us to get directly to forgettable much more reliably.

Ideas for the future include:

more bold and exciting convolution patterns

stacked convolutions?

instead of “random removal” I might be able to force rhythms with more concrete removal patterns?

improved instruments - I upgraded from “just using square waves” to “using a sample-based instrument library”, which, while useful and nice, still doesn’t sound terribly good. A co-worker posted a link to a complete JavaScript implementation of the Yamaha DX7 which could be ported to Tone.js to provide, I think, a much improved sound overall and a nice early-nineties vibe to the tunes

improved drums - the whole drumkit is currently being simulated by a noise synth and some pulses for hi-hats, and I could definitely try to build out something using samples or something there.

learning to make some real music I guess?!?! I’ve moved from “Three Blind Mice” all the way to “Hot Cross Buns”, which I’m assured is a completely different and much more compelling tune. Also apparently Every Good Boy Destroys Faces? that doesn’t seem right

Okay, it is a few days later and I’ve used the Oramics Sample Library and Tone.Sampler to build a loose approximation of a Roland CompuRhythm CR-78. It sounds noticeably better.

Also implemented a drum-drunkifier using the extremely simple algorithm that Adam makes fun of at the beginning of this video: snare goes leetle bit back, every second hi-hat goes leetle bit forward. Techniques like this are good for taking boring, stable, computer-perfect drumlines and making them feel more human - I hope.
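A sketch of that drunkifier, under a couple of stated assumptions: "back" is read as "slightly later" and "forward" as "slightly earlier", hits are objects with a drum name and a time in seconds, and the hits arrive sorted by time so that "every second hi-hat" means what you'd expect. The drunkify name, the hit shape, and the default 20ms offset are all hypothetical.

```javascript
// Nudge snares late and every second hi-hat early.
// Assumes `hits` is sorted by time.
function drunkify(hits, offset = 0.02) {
  let hihatCount = 0;
  return hits.map((hit) => {
    if (hit.drum === "snare") {
      // Snare goes a little bit back (later).
      return { ...hit, time: hit.time + offset };
    }
    if (hit.drum === "hihat") {
      hihatCount += 1;
      if (hihatCount % 2 === 0) {
        // Every second hi-hat goes a little bit forward (earlier),
        // clamped so nothing lands before time zero.
        return { ...hit, time: Math.max(0, hit.time - offset) };
      }
    }
    // Kicks and odd-numbered hi-hats stay on the grid.
    return hit;
  });
}

// Example: one bar of a very square beat.
const beat = [
  { drum: "kick", time: 0 },
  { drum: "hihat", time: 0 },
  { drum: "hihat", time: 0.5 },
  { drum: "snare", time: 1 },
  { drum: "hihat", time: 1 },
  { drum: "hihat", time: 1.5 },
];
console.log(drunkify(beat));
```

The offsets are fixed rather than random, so the groove is consistently wonky rather than sloppy.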

Having gone to all of this trouble, I can also just use this single sound clip of a cowbell to play a cowbell sound effect.

Huh, it worked. I wonder what would happen if I….

Yes! YES!! PHENOMENAL COSMIC POWER! I’ve got a fever!

I guess I should also post an up-to-date tune generated, this time using the drumkit and the new “fuzz-electric” guitar that I booped together using the guitar-electric sample and some stacked effects.

Yeah, that’s pretty backgroundy. I’m tuning it out as we speak.

Motherflippin’ Dynamically Generated Harmonics

A few days later still, and, uh, harmonics. I’ve been having trouble with harmonics. They’re hard.

I tried a few different naive harmonic-generation strategies that all went terribly.

Strategy 1: Take every “rhythm” note and simply convert it into a major chord. Result: this sounds very bad.

Strategy 2-8: A variety of variations on Strategy 1 where I try out various different chord-shaped transformations on the rhythm line. Result: still very bad.

Strategy 9: Take the tonic key of the generated tune and play a bog-simple I-V-vi-IV progression with it behind the music. Result: the chords on their own sound nice, but not when played with the actual melody/rhythm.

Strategy 10: The above, but synced with the drumbeat to play kind of in-time with the music. Result: still pretty bad, lots of ugly sounds when the chords disagree with the melody.

So, strategy 11 is a complete rethink, based in no small part on listening to a very pleasant music teacher on youtube.

So here’s the algorithm:

Calculate any point in the generated music where the rhythm and the melody track overlap.

Smoosh these overlaps together - if C# and G# overlap six times in a row, we convert that into one C#-G# overlap for the entire stretch.

Search the tonal.js chord library for chords containing the overlapping notes:

If a chord was found, play it (somewhere else on the keyboard)

If a chord wasn’t found, just play the two notes again (somewhere else on the keyboard).

And the result is… pretty listenable. It kind of works!
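Here’s a stripped-down sketch of those steps. To keep it self-contained it does not call tonal.js at all: findChord is a tiny hand-rolled stand-in that only knows major and minor triads, so treat it as a placeholder for the real chord library lookup. Other assumptions: both tracks are arrays on the same step grid, holding a note name or null per step, and the "somewhere else on the keyboard" octave shift is left out entirely. All the names here are hypothetical.

```javascript
const PITCHES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];
const SHAPES = { major: [0, 4, 7], minor: [0, 3, 7] };

// Stand-in for a chord library lookup: returns the first major or
// minor triad that contains every given note, or null if none does.
function findChord(notes) {
  for (let root = 0; root < 12; root++) {
    for (const shape of Object.values(SHAPES)) {
      const chord = shape.map((interval) => PITCHES[(root + interval) % 12]);
      if (notes.every((note) => chord.includes(note))) return chord;
    }
  }
  return null;
}

function harmonize(melody, rhythm) {
  const spans = [];
  const steps = Math.min(melody.length, rhythm.length);
  for (let step = 0; step < steps; step++) {
    const a = melody[step];
    const b = rhythm[step];
    // Step 1: only steps where both tracks sound count as overlaps.
    if (a == null || b == null) continue;
    const key = [a, b].sort().join("-");
    const last = spans[spans.length - 1];
    if (last && last.key === key && last.start + last.length === step) {
      // Step 2: smoosh back-to-back identical overlaps into one stretch.
      last.length += 1;
    } else {
      spans.push({ key, start: step, length: 1, notes: [...new Set([a, b])] });
    }
  }
  // Step 3: play a matching chord if one exists, else just the two notes.
  return spans.map(({ start, length, notes }) => ({
    start,
    length,
    notes: findChord(notes) ?? notes,
  }));
}

// Example: three steps of C#+G#, a gap, then a C+F# tritone.
console.log(harmonize(
  ["C#", "C#", "C#", null, "C", "C"],
  ["G#", "G#", "G#", "G#", "F#", null],
));
```

Since every chord is built from notes the melody and rhythm are already playing together, the harmony can't disagree with them, which was exactly the problem with the earlier strategies.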

anyways, this has been too serious for too long

Anyways, I also recorded myself going “DOOOOOOO” and loaded it into the sampler, to create this delightful arrangement:

oh yeah, that’s the good stuff