Groovelet v.2df0c84: Whatever Comes Before Alpha

I've launched something that doesn't DO anything.

Okay, status update. After spending the better part of two years donking around with engines (trying out Electron, trying out Unity, trying out Elixir) and throwing a lot of engineering cycles at some extremely hard problems - distributed grid synchronization (I can talk about that more later, but yikes) and procedural music generation (1, 2) - I’m starting to feel a lot of pressure to stop donking around with complicated tech and just get something in front of people.

Surely all of this learning and experimentation will pay off down the road, but at some point a product has to form.

So I put away all of my fun new toys, opened a new repo, and just started hacking with Node. I have in the realm of “a loose decade” of JavaScript programming experience, so this is, at the very least, an environment where I can make a lot of forward progress quickly. (Previous projects in this space include CardChapter, the VRChat backend, and Horse Drawing Tycoon.)

I’ve made a lot of forward progress very quickly, in no small part because a lot of the stuff I’ve been working on is already half-present in previous iterations of the project. Also my nights and weekends have been pretty slow lately. Nothing good on TV. Few social obligations.

Anyways, the goal of the current sprint of development has been getting the project to a place with a well-defined deployment pipeline.

Single-Box Deploy

One thing that’s been an ongoing concern in the deployment space is the stark, stark difference in deployment environments between a hobby project and a popular product. I have spent a bunch of time faffing around with Kubernetes and Redis Cluster, which are amazing technologies for using big sacks of cash to deliver global-scale products, but there’s a really good chance that for the first couple of years of Groovelet development this whole product is going to exist on One Server, That’s It. Namely, the dedicated server that I pay for and use for all of my utility-grade personal projects.

Let’s go to the fake whiteboard, and I’ll show you how my dedicated server works:

So, a lot of different domain names point, through Cloudflare, to my server. In order to keep management and maintenance of this long-running (now 4 years old) server more-or-less neat and tidy and orderly, there are only two things that it actually gets to run: docker and nginx. If I want to run any other kind of project long-term on this computer, it’s gotta get containerized and then pointed-to-by-an-nginx-rule.

In the past, it’s run stuff like InspIRCd, ownCloud, and Mastodon, but I didn’t actually like any of those. Eventually I settled on just the two services that I really care to maintain: Discourse and Icecast. (Discourse is, in my opinion, the finest forum product that currently exists, absolutely dominating a market that’s almost completely dead. My biggest quibble with them is that their “civilized discussion” marketing tagline comes with a reaaaal subtle eurocentric colonialist bias that doesn’t sound so sharp in this-the-year-2021, and my second biggest quibble is that the product is designed for large communities of yahoos, so when you’re pointing it at a small community of people you trust, you need to turn off like 80% of the safety features lest one of your friends complain that they aren’t allowed to post with just emoji. As for Icecast: it’s old and complicated, but it’s absolutely awesome to be able to stream my personal radio station to any device or speaker, anywhere.)

All of the system’s docker logs and syslogs are mooshed together and sent off to Papertrail for analysis. I used to love Papertrail. Then they got bought by SolarWinds. Now I only kinda like Papertrail. In the near future, I plan to just set up a cheapo VPS sidecar box for FOSS logs-and-stats, using Elasticsearch, Kibana, VictoriaMetrics, and Grafana, although that introduces a whole “who watches the watchmen” problem when it comes time to decide how I monitor THAT box.

As it turns out, this makes the machine, at least in my opinion, fabulously easy to operate. Keep up to date with security patches, make sure docker and nginx are running, boom. Adding a new service to the system is as easy as adding a new nginx configuration file, and docker is a self-contained little black box which, given a restart policy, monitors and restarts any services within.

So this is the environment we’re deploying against. It’s not much. At work, even with a small job, I’d generally start with a well-defined cluster of inexpensive VPSs that each perform a different role, rather than smashing the whole project onto one computer.

But the alternative would be spending a lot more money - and one of the rules of hobby development is that you’ve gotta keep those costs contained. A dedicated server packed to the brim with containers is extremely cost effective, in my opinion.

Anyways: designing a deploy for this environment. I decided on docker-compose - just a nice tidy little box of interconnected services that can also be managed with docker. The backing databases are going to be redis and mongo - I’ve done some experimentation with Postgres, CouchDB, and CockroachDB, (see also: the years-long eternal experimentation phase at the beginning of the project) and … dang, I’ve just grown to know redis and mongo like old friends at this point. Redis for short-term storage, mongo for anything that hits the disk, let’s gooooo. I reserve the right to think that CockroachDB is coolbeans, and also totally senseless overengineering for a single-computer deploy.
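Inside a docker-compose network, each container can reach the others by service name. A minimal sketch of how the Node backend might pick up its database connection strings, assuming compose services named `redis` and `mongo` and hypothetical `REDIS_URL`, `MONGO_URL`, and `PORT` environment variables (none of these names come from the actual project):

```javascript
// Hypothetical config loader: in docker-compose, containers reach each other
// by service name, so "redis" and "mongo" resolve to the backing services.
function loadConfig(env = process.env) {
  return {
    // Short-term state lives in Redis.
    redisUrl: env.REDIS_URL || "redis://redis:6379",
    // Anything that needs to hit the disk goes to MongoDB.
    mongoUrl: env.MONGO_URL || "mongodb://mongo:27017/groovelet",
    // Port the app listens on inside its container.
    port: Number.parseInt(env.PORT || "3000", 10),
  };
}
```

The fallbacks mean the app works unchanged inside the compose network, while the environment variables leave room to point it somewhere else later.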

That allows us to just drop our product and its backing services on the server and connect to it with nginx. Nice, right?

The Berenstain Bears and Too Many Proxies

While I was working on this thing, I was thinking that instead of building it as a full-on-monolith, like some of my earlier projects, I’d prefer to compose it out of… well, I refuse to say microservices on principle, but I wanted to take some effort to make the backend, frontend, and marketing website independent self-contained units.

Separating the backend and frontend is a long-standing practice, but a separate marketing website? Why bother with that? Well, honestly, I’ve just found that the concerns around delivering your website’s front page and the concerns around delivering every other part of your website are extremely different. Heck, Unbounce built a whole business model around your “landing page”.
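The split itself boils down to a routing decision on the request path. The real rules live in the proxy's configuration, but the logic can be sketched in JavaScript, assuming hypothetical upstream hostnames (`marketing`, `frontend`, `backend`) and a hypothetical URL layout where `/` and `/about` belong to the marketing site and `/api` to the backend:

```javascript
// Hypothetical upstream hosts, reachable by service name inside the compose network.
const UPSTREAMS = {
  marketing: "http://marketing:8080",
  frontend: "http://frontend:8080",
  backend: "http://backend:3000",
};

// True if `path` is exactly `prefix` or sits underneath it.
function under(path, prefix) {
  return path === prefix || path.startsWith(`${prefix}/`);
}

// Decide which self-contained unit should serve a request path.
function pickUpstream(path) {
  // The landing page (and other marketing pages) are their own concern.
  if (path === "/" || under(path, "/about")) return UPSTREAMS.marketing;
  // API calls go straight to the backend.
  if (under(path, "/api")) return UPSTREAMS.backend;
  // Everything else is the web app itself.
  return UPSTREAMS.frontend;
}
```

Matching on whole path segments (`/api/...`, not just the prefix `/api`) keeps a path like `/apiary` from accidentally landing on the backend.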

That split is a job for nginx, again. Well, it would be, except one of my teammates introduced me to OpenResty, which is just… nginx but way, way better? The thing about nginx is that, like a lot of successful open-source projects, it’s struggling to monetize, so they’ve actually locked a lot of their juiciest features behind a closed-source product and subscription paywall, Nginx Plus. And, like with Fedora/RHEL and CentOS, the community has just… taken the open-source product and run with it to make something great. So, OpenResty. As a bonus, as the project expands in scope and (hopefully) popularity, I can learn how to use OpenResty’s more advanced filtering techniques to act as a pretty skookum web application firewall, which is good, because Cloudflare is gradually turning into the industry equivalent of an F2P game.

I’m extremely proud of this graphic.

But that OpenResty configuration becomes, like, a whole part of the application. As for the dumb, lightweight nginx layer that’s running on my personal server, I don’t want it to get all bogged down in configurations that are wildly specific to this project and this project alone.

So the proxy, and its configurations, go into a container.

Which feels relatively satisfying, but now, between Cloudflare, nginx, and OpenResty, we’re juggling three proxies. Is that too many proxies? Perhaps. It’s impossible to know for sure. Maybe yes.

Automated Builds: It’s GitHub!

There are way more details that I’ve hammered out in the past week. I’m running builds and automated testing with GitHub Actions, which… oh no. I’m sorry to Travis and CircleCI and all of their competitors, but GitHub Actions is free (at least at hobby scale) and good, and Microsoft-GitHub’s slow bid to control the development space is going surprisingly well. I can’t help but notice that GitHub’s project management tools are expanding into the realm of compelling competition for commercial bug-trackers, wikis, and organization tools. GitHub’s gonna eat the whole world.

Between that and some glue-code scripting, I’ve got a push-button deploy-to-prod with a nice quick build cycle. Nice.

Authentication

With most of the environment at least semi-sorted, the next thing to work on is auth.

Oh boy, authentication. If you’re a backend programmer, some 75% of your job is going to be authentication and environments, forever and ever and ever. At this point I’ve been doing e-mail-and-password auth with salted hashes since the beginning of time.
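For reference, classic salted hashing can be done in Node with the built-in `crypto.scryptSync`. This is a minimal sketch, not the project's code: the `salt:hash` storage format and the 64-byte key length are assumptions, and Node's default scrypt cost parameters are used.

```javascript
const crypto = require("node:crypto");

// Assumed derived-key length; scrypt cost parameters use Node's defaults.
const KEY_LENGTH = 64;

// Hash with a fresh random salt and store "saltHex:hashHex" on the user record.
function hashPassword(password) {
  const salt = crypto.randomBytes(16);
  const hash = crypto.scryptSync(password, salt, KEY_LENGTH);
  return `${salt.toString("hex")}:${hash.toString("hex")}`;
}

// Re-derive with the stored salt and compare in constant time.
function verifyPassword(password, stored) {
  const [saltHex, hashHex] = stored.split(":");
  if (!saltHex || !hashHex) return false;
  const expected = Buffer.from(hashHex, "hex");
  // Reject malformed records; timingSafeEqual also requires equal lengths.
  if (expected.length !== KEY_LENGTH) return false;
  const actual = crypto.scryptSync(password, Buffer.from(saltHex, "hex"), KEY_LENGTH);
  return crypto.timingSafeEqual(actual, expected);
}
```

The per-user salt means two users with the same trash-tier password still get different stored hashes, and the deliberately slow KDF makes offline guessing expensive.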

Here are some things I hate about classic authentication:

- email
- The User Forgot Their Password, Now What
- electronic mail
- E-Mail
- oops, the user used a pwned password and lost everything
- mail
- It’s still so, so easy to create mountains of low-effort accounts

These are only a few, but there are so many more.

Sending users e-mails is a gargantuan pain in the ass. I generally turn to Amazon SES for this; I don’t think there’s a more competitively priced alternative out there.

Once we have an e-mail provider, we have to carefully manage our e-mail reputation. Users giving us false e-mail addresses will hurt that rep (bounces count against us), so we need to engage in all sorts of filtering and fussing to avoid sending e-mails to invalid addresses. If users fat-finger their e-mail address, or our e-mail service is DOWN while they’re creating their account, those users are probably lost forever.
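A cheap pre-send check can catch some of this before an address ever reaches the mail provider. A minimal sketch, assuming a hypothetical suppression set of addresses that previously bounced or complained and a small hypothetical table of common domain typos; the regex is a loose shape check, not RFC 5322 validation.

```javascript
// Deliberately loose shape check: something@something.something, one "@", no spaces.
const EMAIL_SHAPE = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

// Hypothetical table of common fat-fingered domains.
const DOMAIN_TYPOS = new Map([
  ["gmial.com", "gmail.com"],
  ["gmail.con", "gmail.com"],
  ["hotmial.com", "hotmail.com"],
]);

// `suppressed` is a hypothetical set of addresses that bounced or complained before.
function precheckEmail(address, suppressed = new Set()) {
  const trimmed = address.trim();
  if (!EMAIL_SHAPE.test(trimmed)) return { ok: false, reason: "malformed" };
  const at = trimmed.lastIndexOf("@");
  const local = trimmed.slice(0, at);
  // Domains are case-insensitive; local parts technically are not, so leave them alone.
  const domain = trimmed.slice(at + 1).toLowerCase();
  const normalized = `${local}@${domain}`;
  if (suppressed.has(normalized)) return { ok: false, reason: "suppressed" };
  const fixedDomain = DOMAIN_TYPOS.get(domain);
  if (fixedDomain) {
    // Ask the user to confirm instead of silently sending to a dead address.
    return { ok: false, reason: "likely-typo", suggestion: `${local}@${fixedDomain}` };
  }
  return { ok: true, normalized };
}
```

This only filters the obvious cases; an address that passes can still bounce, so the provider's bounce notifications would still need to feed back into the suppression set.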

Oh, and our users’ password etiquette is absolutely abysmal. And this is fair: I have trash-tier passwords for all kinds of websites that I don’t care about, because if someone uses cred-stuffing to break into my farts.org account and create havoc, that’s… really more farts.org’s problem than my problem. I don’t care about my farts.org account, but THEY do. From the point of view of a service provider, though, this can be really frustrating, as cred-stuffers can accumulate an awful lot of unimportant identities to attack you with.
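One common defense against pwned passwords (not something this post says Groovelet does) is the Have I Been Pwned "Pwned Passwords" range API, which uses k-anonymity: the client sends only the first five hex characters of the password's SHA-1 hash to `https://api.pwnedpasswords.com/range/{prefix}` and gets back lines of the form `SUFFIX:COUNT` to match locally. A sketch, with the fetcher injectable so it can be tested offline (the default uses the global `fetch` from Node 18+):

```javascript
const crypto = require("node:crypto");

// Split the SHA-1 of the password into the 5-char prefix we send
// and the 35-char suffix we look for locally (k-anonymity).
function sha1Parts(password) {
  const digest = crypto.createHash("sha1").update(password, "utf8").digest("hex").toUpperCase();
  return { prefix: digest.slice(0, 5), suffix: digest.slice(5) };
}

// Each response line looks like "SUFFIX:COUNT"; return the count for our suffix, or 0.
function countInRangeResponse(body, suffix) {
  for (const line of body.split(/\r?\n/)) {
    const [candidate, count] = line.trim().split(":");
    if (candidate === suffix) return Number.parseInt(count, 10);
  }
  return 0;
}

// The full password never leaves the machine, only the hash prefix does.
async function pwnedCount(password, fetchRange = defaultFetchRange) {
  const { prefix, suffix } = sha1Parts(password);
  const body = await fetchRange(prefix);
  return countInRangeResponse(body, suffix);
}

async function defaultFetchRange(prefix) {
  const res = await fetch(`https://api.pwnedpasswords.com/range/${prefix}`);
  if (!res.ok) throw new Error(`range lookup failed: ${res.status}`);
  return res.text();
}
```

A non-zero count could be used to warn or refuse at signup. Note that this only helps with password-based accounts, not the cred-stuffers' supply of throwaway identities.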

This e-mail management / password-selection / account sign-up dance is a huge, huge wall between users and my service. I abandon websites that ask me to log in up-front, and I do it frequently.

Which brings me to the “wacky ideas” phase of authentication. Do you know what people say about authentication? They say “you’re definitely smart enough to get this right on your own, build whatever you want without regard for standards”. I’m pretty sure that’s what people say.

Players Have Two Stats, Bear and Criminal

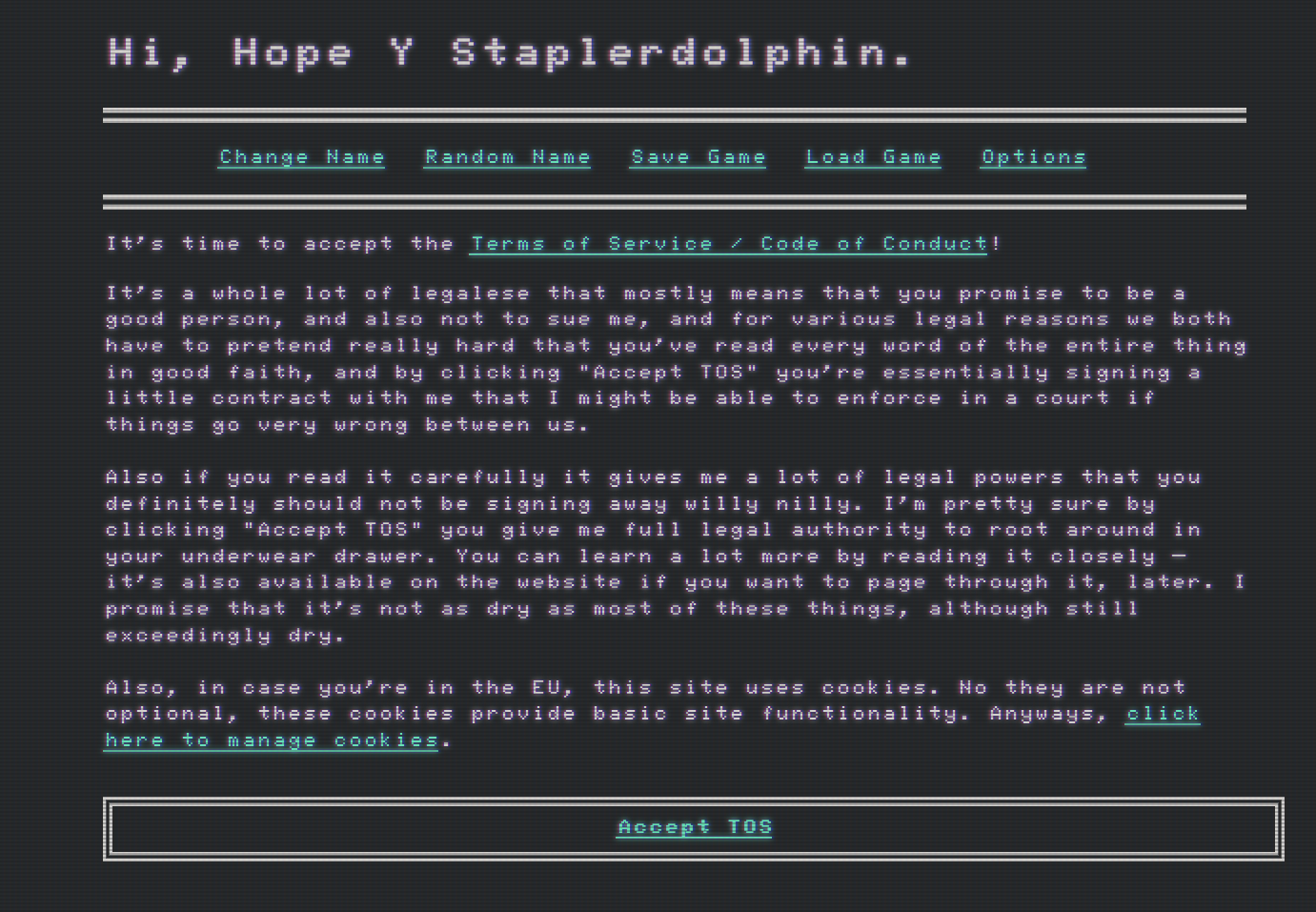

The plan is as follows: users must complete a CAPTCHA, their computer must compute a relatively easy hashcash challenge, and they must agree to a TOS that they’ve only barely read. No username, no e-mail, no password.
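The proof-of-work step can be sketched as a hashcash-style puzzle. Classic hashcash uses SHA-1 and its own stamp format; this sketch swaps in SHA-256 over a hypothetical `challenge:nonce` string and asks for a number of leading zero bits. The challenge format and default difficulty are assumptions, and a real server would also need to remember issued challenges and reject replays.

```javascript
const crypto = require("node:crypto");

// Count leading zero bits of a digest buffer.
function leadingZeroBits(buf) {
  let bits = 0;
  for (const byte of buf) {
    if (byte === 0) {
      bits += 8;
      continue;
    }
    bits += Math.clz32(byte) - 24; // clz32 counts over 32 bits; a byte uses the low 8
    break;
  }
  return bits;
}

function digest(challenge, nonce) {
  return crypto.createHash("sha256").update(`${challenge}:${nonce}`).digest();
}

// Server: issue a random challenge with a difficulty that is cheap for one browser.
function issueChallenge(difficulty = 16) {
  return { challenge: crypto.randomBytes(16).toString("hex"), difficulty };
}

// Client: brute-force a nonce whose hash has enough leading zero bits.
function solve({ challenge, difficulty }) {
  for (let nonce = 0; ; nonce++) {
    if (leadingZeroBits(digest(challenge, nonce)) >= difficulty) return nonce;
  }
}

// Server: verification costs a single hash.
function verify({ challenge, difficulty }, nonce) {
  return leadingZeroBits(digest(challenge, nonce)) >= difficulty;
}
```

At 16 bits, a client needs about 2^16 (roughly 65,000) hashes on average, which is quick for one signup but adds up for someone scripting thousands of them, while the server pays for just one hash per check.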

Once that’s done, we generate a temporary (Redis-only) account for the player, with a generated name and password, which are stored in the browser’s localStorage. Now every time they come back on that browser, they’re logged in.
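Creating the temporary account could look something like this. A minimal sketch under assumptions: the word lists, the `tempaccount:` key prefix, and the 30-day expiry are hypothetical, and `store.setIfAbsent(key, value, ttlSeconds)` is a stand-in for a Redis client call behaving like `SET key value EX ttl NX`, so a colliding generated name is retried rather than overwritten.

```javascript
const crypto = require("node:crypto");

// Hypothetical word lists for friendly generated names.
const ADJECTIVES = ["brisk", "mellow", "funky", "quiet", "groovy"];
const NOUNS = ["otter", "synth", "badger", "tuba", "comet"];

// Hypothetical expiry: untouched temporary accounts vanish from Redis after 30 days.
const TEMP_ACCOUNT_TTL_SECONDS = 60 * 60 * 24 * 30;

function generateName() {
  const adjective = ADJECTIVES[crypto.randomInt(ADJECTIVES.length)];
  const noun = NOUNS[crypto.randomInt(NOUNS.length)];
  return `${adjective}-${noun}-${crypto.randomInt(10000)}`;
}

// `store.setIfAbsent` is a stand-in for Redis `SET key value EX ttl NX`;
// it should resolve to true only if the key did not already exist.
async function createTemporaryAccount(store, maxAttempts = 5) {
  // 256 random bits; the client keeps this plaintext in localStorage.
  const password = crypto.randomBytes(32).toString("base64url");
  // A long random secret isn't realistically guessable, so a fast hash is enough
  // here (human-chosen passwords would still need a slow KDF like scrypt).
  const passwordHash = crypto.createHash("sha256").update(password).digest("hex");
  const record = JSON.stringify({ passwordHash, createdAt: Date.now() });

  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const name = generateName();
    if (await store.setIfAbsent(`tempaccount:${name}`, record, TEMP_ACCOUNT_TTL_SECONDS)) {
      return { name, password };
    }
  }
  throw new Error("could not allocate a unique temporary account name");
}
```

Keeping these accounts Redis-only with a TTL means abandoned throwaway sessions clean themselves up without ever touching the disk-backed database.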

All well and good unless they want to log in from a second computer, but that’s what “save” and “load” are for. Save generates a creds file (which the user can manage however they darn well please), and load reads a creds file back in, logging them in.
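The save/load pair is mostly serialization. A sketch assuming a hypothetical versioned JSON creds format; in the browser, the saved string would be offered as a download and the loaded one read from a file picker, neither of which is shown here.

```javascript
// Hypothetical creds-file format: a small, versioned JSON document.
const CREDS_VERSION = 1;

// "Save": serialize the account's name and password for the user to keep.
function saveCreds({ name, password }) {
  return JSON.stringify({ app: "groovelet", version: CREDS_VERSION, name, password }, null, 2);
}

// "Load": parse and validate a creds file before attempting a login with it.
function loadCreds(text) {
  let data;
  try {
    data = JSON.parse(text);
  } catch {
    throw new Error("not a creds file");
  }
  if (data?.app !== "groovelet" || data.version !== CREDS_VERSION) {
    throw new Error("unsupported creds file");
  }
  if (typeof data.name !== "string" || typeof data.password !== "string") {
    throw new Error("creds file is missing fields");
  }
  return { name: data.name, password: data.password };
}
```

The version field leaves room to change the format later (say, once accounts gain an e-mail address) without breaking files people already saved.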

I think it’s a good idea. Voxel thinks it’s insane and byzantine. She’s probably right.

From there, we can gradually give the player’s account more powers as they prove that they’re a real person. This accepts that a user’s first interaction with our product is very likely to be a throwaway interaction, and respects that we need to make it as seamless as possible, only hitting them with more responsibilities when they want to upgrade. Maybe, once they decide to stay around a while, we can ask for an e-mail address or even a phone number to use for account recovery.
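The gradual-powers idea maps naturally onto ordered trust tiers. A sketch in which the tier names and capabilities are all hypothetical (the post only mentions an e-mail address and a phone number as possible later steps):

```javascript
// Hypothetical trust tiers, lowest to highest.
const TIERS = ["temporary", "email-verified", "phone-verified"];

// Hypothetical capabilities and the minimum tier that unlocks each one.
const REQUIRED_TIER = new Map([
  ["play", "temporary"],
  ["chat", "email-verified"],
  ["createRoom", "email-verified"],
  ["inviteOthers", "phone-verified"],
]);

// Unknown capabilities and unknown tiers are denied.
function can(account, capability) {
  const needed = REQUIRED_TIER.get(capability);
  if (needed === undefined) return false;
  const have = TIERS.indexOf(account.tier);
  return have !== -1 && have >= TIERS.indexOf(needed);
}

// Upgrades only ever move an account up; returns a new account object.
function upgrade(account, tier) {
  if (!TIERS.includes(tier)) throw new Error(`unknown tier: ${tier}`);
  return TIERS.indexOf(tier) > TIERS.indexOf(account.tier) ? { ...account, tier } : account;
}
```

Using a `Map` rather than a plain object for the capability table avoids inherited keys like `toString` being mistaken for real capabilities.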

And That’s It, That’s All I’ve Got For Now

That’s where I am after 2 weeks: just the skeleton of an environment and the bones of an authentication system. It’s online, even. You can see the first build at https://groovelet.com/ right now, if you’d like, but all you can do is create a temporary account and nothing else. This may be too M even for an MVP.

Wow, this was a long post. I even had to trim some of it to fit it into Substack’s EMAIL RULES. Thanks… thanks for reading all of this, if you got this far. If you get all of the way to the end, ping me on Twitter and I’ll send you an artisanal, hand-selected stupid meme for your trouble.